This blog post started with a conversation with my Twitter friend Martin Berger (@martinberx): he suggested that I test the Flex ASM behavior with a different ASM disk path on each machine.

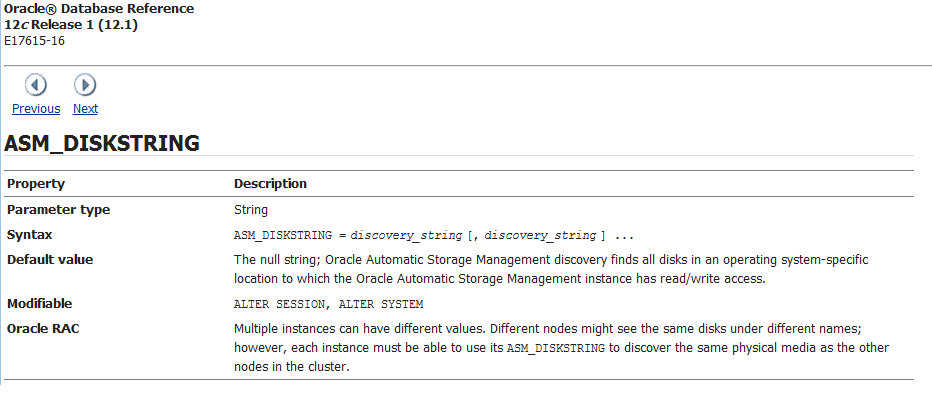

As the documentation states:

Different nodes might see the same disks under different names, however each instance must be able to use its ASM_DISKSTRING to discover the same physical media as the other nodes in the cluster.

I am not saying this is good practice, but since everything that is not forbidden is allowed, let’s give it a try:

- My Flex ASM lab is a 3-node RAC.

- The ASM_DISKSTRING is set to /dev/asm* on the ASM instances.

- I’ll add a new disk, with udev rules in place on the 3 machines so that the new disk is identified as:

  - /dev/asm1-disk10 on racnode1

  - /dev/asm2-disk10 on racnode2

  - /dev/asm3-disk10 on racnode3

As you can see, the ASM_DISKSTRING (/dev/asm*) is able to discover this new disk on all three nodes. Please note that this is the same shared disk; it is just identified by a different path on each node.
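To make the discovery-string behavior concrete, here is a minimal shell sketch. It does not touch real devices and does not reproduce what ASM does internally: it simulates the three nodes with scratch directories (the `discover` function and the directory layout are hypothetical, for illustration only) and shows that the same `asm*` pattern matches a different name on each node.

```shell
#!/bin/sh
# Illustration only: simulate the /dev directory of each node with a
# scratch directory. Function name and layout are hypothetical.

# Print the entries of a (simulated) /dev directory matching asm*.
discover() {
  for f in "$1"/asm*; do
    # Skip the unexpanded pattern when nothing matches.
    if [ -e "$f" ]; then
      basename "$f"
    fi
  done
}

scratch="$(mktemp -d)"
for n in 1 2 3; do
  mkdir -p "$scratch/racnode$n/dev"
  # Same shared disk, different name on each node.
  touch "$scratch/racnode$n/dev/asm$n-disk10"
  # An unrelated device that the pattern must not match.
  touch "$scratch/racnode$n/dev/sda"
done

for n in 1 2 3; do
  echo "racnode$n: $(discover "$scratch/racnode$n/dev")"
done

rm -rf "$scratch"
```

Each node reports its own name for the disk (asm1-disk10, asm2-disk10, asm3-disk10), which is all the discovery string requires.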

On my Flex ASM lab, 2 ASM instances are running:

srvctl status asm

ASM is running on racnode2,racnode1

Let’s create a diskgroup IOPS on this new disk (from the +ASM1 instance):

. oraenv

ORACLE_SID = [+ASM1] ? +ASM1

The Oracle base remains unchanged with value /u01/app/oracle

[oracle@racnode1 ~]$ sqlplus / as sysasm

SQL*Plus: Release 12.1.0.1.0 Production on Tue Jul 16 12:03:59 2013

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 12c Enterprise Edition Release 12.1.0.1.0 - 64bit Production

With the Real Application Clusters and Automatic Storage Management options

SQL> create diskgroup IOPS external redundancy disk '/dev/asm1-disk10';

Diskgroup created.

SQL> exit

Disconnected from Oracle Database 12c Enterprise Edition Release 12.1.0.1.0 - 64bit Production

With the Real Application Clusters and Automatic Storage Management options

[oracle@racnode1 ~]$ srvctl start diskgroup -g IOPS

[oracle@racnode1 ~]$ srvctl status diskgroup -g IOPS

Disk Group IOPS is running on racnode2,racnode1

So everything went fine. Let’s check the disk from the ASM point of view:

SQL> l

1 select

2 i.instance_name,g.name,d.path

3 from

4 gv$instance i,gv$asm_diskgroup g, gv$asm_disk d

5 where

6 i.inst_id=g.inst_id

7 and g.inst_id=d.inst_id

8 and g.group_number=d.group_number

9 and g.name='IOPS'

10*

SQL> /

INSTANCE_NAME NAME PATH

---------------- ------------------------------ --------------------

+ASM1 IOPS /dev/asm1-disk10

+ASM2 IOPS /dev/asm2-disk10

As you can see, +ASM1 discovered /dev/asm1-disk10 and +ASM2 discovered /dev/asm2-disk10. This is expected and everything is OK so far.

Now let’s go to the third node, racnode3, where no ASM instance is running.

Remember that on racnode3 the new disk is /dev/asm3-disk10. Let’s connect to the NOPBDT3 database instance and create a tablespace IOPS on the IOPS diskgroup.

SQL> create tablespace IOPS datafile '+IOPS' size 1g;

Tablespace created.

Perfect, everything is OK. Now let’s check v$asm_disk from the NOPBDT3 database instance:

SQL> select path from v$asm_disk where path like '%10';

PATH

--------------------------------------------------------------------------------

/dev/asm2-disk10

As you can see, the NOPBDT3 database instance is connected to the +ASM2 instance (as it reports /dev/asm2-disk10).

But the NOPBDT3 database instance, located on racnode3, actually accesses /dev/asm3-disk10:

SQL> select instance_name from v$instance;

INSTANCE_NAME

----------------

NOPBDT3

SQL> !ls -l /dev/asm2-disk10

ls: cannot access /dev/asm2-disk10: No such file or directory

SQL> !ls -l /dev/asm3-disk10

brw-rw----. 1 oracle dba 8, 193 Jul 16 15:35 /dev/asm3-disk10

Ooooh wait! The NOPBDT3 database instance accesses the disk through /dev/asm3-disk10, a path that is not recorded in gv$asm_disk.
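As a side check, the `8, 193` in the `ls -l` output above is the major and minor number of the block device. Assuming the standard Linux numbering for SCSI disks under major 8 (16 minors per disk, minor 0 being sda), the minor can be decoded with a few lines of shell. The function name is made up for this sketch.

```shell
#!/bin/sh
# Decode a minor number under block major 8 (sda..sdp).
# Assumes the standard Linux layout: 16 minors per disk,
# minor % 16 == 0 is the whole disk, 1..15 are partitions.
sd_name_from_minor() {
  minor="$1"
  disk=$(( $minor / 16 ))
  part=$(( $minor % 16 ))
  # Pick the disk letter: position 1 is "a", position 13 is "m".
  letter="$(echo abcdefghijklmnop | cut -c"$(( $disk + 1 ))")"
  if [ "$part" -eq 0 ]; then
    echo "sd$letter"
  else
    echo "sd$letter$part"
  fi
}

sd_name_from_minor 193   # prints sdm1
```

Under that assumption /dev/asm3-disk10 is the first partition of sdm, which is the device to look for in iostat.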

So what if I launch SLOB locally on the NOPBDT3 database instance: are the I/O metrics recorded?

First, let’s set up SLOB on the IOPS tablespace:

[oracle@racnode3 SLOB]$ ./setup.sh IOPS 3

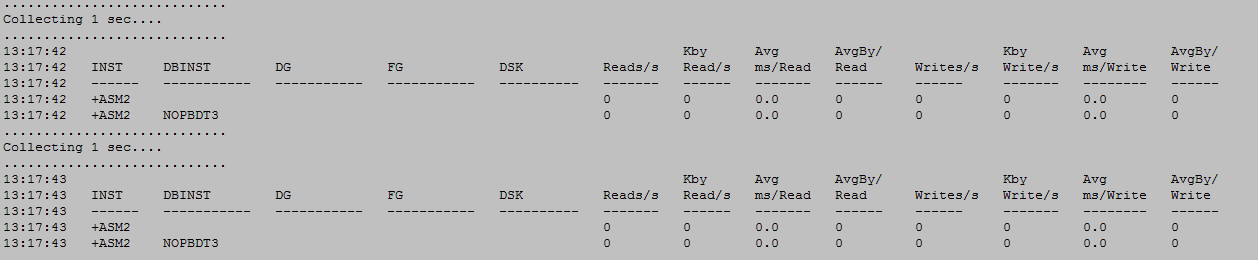

Now launch SLOB and check the I/O metrics with my asmiostat utility, this way:

./real_time.pl -type=asmiostat -show=inst,dbinst -dg=IOPS

With the following output:

As you can see, the metrics have not been recorded, although the I/Os did take place (/dev/asm3-disk10 is /dev/sdm):

egrep -i "sdm|device" iostat.out | tail -4

Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await svctm %util

sdm 0.00 0.00 101.64 0.00 0.79 0.00 16.00 1.80 17.74 6.05 61.52

Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await svctm %util

sdm 0.00 0.00 109.34 0.00 0.85 0.00 16.00 1.82 16.66 5.70 62.28
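The iostat columns can be cross-checked against each other. avgrq-sz is reported in 512-byte sectors, so 16 sectors means 8 KB per request, and r/s multiplied by that size should land on rMB/s. A small sketch of that arithmetic (the function name is illustrative):

```shell
#!/bin/sh
# Cross-check iostat columns: r/s * avgrq-sz (in 512-byte sectors)
# should roughly equal rMB/s.
read_mb_per_s() {
  # $1 = r/s, $2 = avgrq-sz in 512-byte sectors
  LC_ALL=C awk -v rs="$1" -v sectors="$2" \
    'BEGIN { printf "%.2f\n", rs * sectors * 512 / 1024 / 1024 }'
}

read_mb_per_s 101.64 16.00   # prints 0.79
read_mb_per_s 109.34 16.00   # prints 0.85
```

Both values match the rMB/s column of the two samples. Reads of 8 KB each would also be consistent with single-block reads, assuming the database uses an 8 KB block size (the post does not state it).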

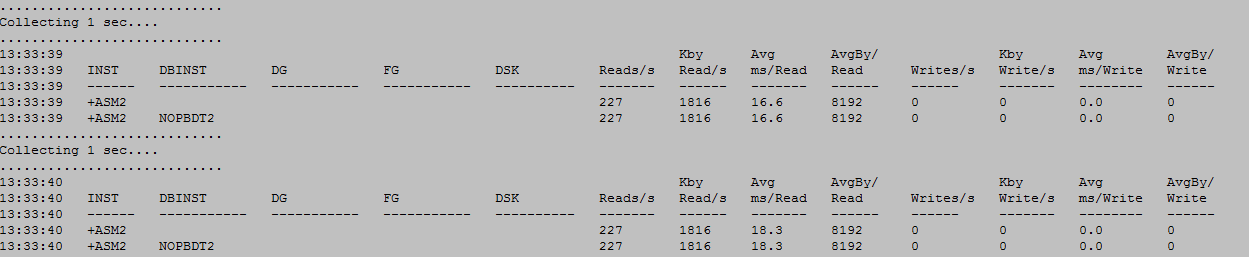

Of course, the same test launched from the NOPBDT2 database instance, connected to the +ASM2 instance, would produce the following output:

So the metrics are recorded when the database instance does its I/O on devices that the ASM instance is aware of (NOPBDT2 connected to +ASM1 would produce the “invisible” metrics).

Important remark:

The metrics are not visible in the gv$asm_disk view (whether queried from the ASM or the database instance), but there is one place where they are recorded: the gv$asm_disk_iostat view, queried from the database instance (not the ASM one).

Conclusion:

The “invisible” I/O issue (metrics not recorded in the gv$asm_disk view) occurs if:

- you set a different disk path per machine (so per ASM instance) in a Flex ASM (12.1) configuration,

- and the database instance is attached to a remote ASM instance (which then uses a different path).

So I would suggest using the same path on every machine for the ASM disks in a Flex ASM (12.1) configuration to avoid this issue.
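A quick way to spot such a configuration is to compare the paths that each ASM instance reports for the same disk. The sketch below assumes the input is plain text with one `instance disk_name path` triple per line, for example spooled from a query on gv$asm_disk. The function name, the disk names (IOPS_0000, DATA_0000) and the DATA rows are made up for the example.

```shell
#!/bin/sh
# Flag ASM disks that are seen under more than one path.
# Input (assumed format): one "instance disk_name path" triple per line.
mixed_paths() {
  LC_ALL=C awk '
    NF == 3 {
      key = $2 SUBSEP $3
      if (!(key in seen)) {   # count each distinct path once per disk
        seen[key] = 1
        npaths[$2]++
      }
    }
    END {
      for (d in npaths)
        if (npaths[d] > 1) print d
    }
  ' | sort
}

# Sample rows: the disk names and the DATA rows are hypothetical.
printf '%s\n' \
  '+ASM1 IOPS_0000 /dev/asm1-disk10' \
  '+ASM2 IOPS_0000 /dev/asm2-disk10' \
  '+ASM1 DATA_0000 /dev/asm-disk1' \
  '+ASM2 DATA_0000 /dev/asm-disk1' | mixed_paths   # prints IOPS_0000
```

Any disk name printed here is a candidate for the “invisible” I/O issue as soon as a database instance connects to a remote ASM instance.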

Update: The asmiostat utility is not part of the real_time.pl script anymore. A new utility called asm_metrics.pl has been created. See “ASM metrics are a gold mine. Welcome to asm_metrics.pl, a new utility to extract and to manipulate them in real time” for more information.