This article is the third part of the “A closer look at ASM rebalance” series:

- Part I: Disks have been added.

- Part II: Disks have been dropped.

- Part III: Disks have been added and dropped (at the same time).

If you are not familiar with ASM rebalance, I suggest first reading these 2 blog posts written by Bane Radulovic:

In this Part III, I want to visualize the rebalance operation (with 3 power values: 2, 6 and 11) after disks have been added and dropped at the same time.

To do so, on a 2-node Extended RAC cluster (11.2.0.4), I added 2 disks to and dropped 2 disks from the DATA diskgroup with a single command (the diskgroup was created with a 4MB ASM allocation unit), and then launched (connected to +ASM1):

- alter diskgroup DATA rebalance power 2; (At 02:11 PM).

- alter diskgroup DATA rebalance power 6; (At 02:24 PM).

- alter diskgroup DATA rebalance power 11; (At 02:34 PM).

Then I waited until it finished (that is, until v$asm_operation returned no rows for the DATA diskgroup).

Note that the second and third statements interrupted the rebalance in progress and launched a new one with the new power.

During this time, I collected the ASM performance metrics for the DATA diskgroup only.
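The “no rows in v$asm_operation” check can be scripted. Here is a minimal Python sketch, under stated assumptions: count_operations is a hypothetical callable that runs the query through whatever database driver you use, and the 60-second polling interval is arbitrary.

```python
import time
from typing import Callable

# Counts the operations still reported for the DATA diskgroup.
# v$asm_operation has no diskgroup name column, so join on GROUP_NUMBER.
REBALANCE_QUERY = """
select count(*)
  from v$asm_operation o
  join v$asm_diskgroup g on g.group_number = o.group_number
 where g.name = 'DATA'
"""


def wait_for_rebalance(
    count_operations: Callable[[], int],
    poll_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Poll until count_operations() returns 0; return how many polls it took.

    count_operations is a hypothetical callable that executes REBALANCE_QUERY
    and returns the single count it produces.
    """
    polls = 0
    while True:
        polls += 1
        if count_operations() == 0:
            return polls
        sleep(poll_seconds)
```

The sleep function is injectable only so that the loop can be exercised without actually waiting.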
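The per-second metrics shown in the graphs can be derived from cumulative counters. As a minimal sketch, assuming two snapshots of the READS, WRITES, BYTES_READ and BYTES_WRITTEN columns of v$asm_disk_stat for one disk, taken a known number of seconds apart (how they are fetched is left out), the values can be computed like this:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiskSnapshot:
    """Cumulative counters for one disk (assumed to come from v$asm_disk_stat)."""
    reads: int
    writes: int
    bytes_read: int
    bytes_written: int


def interval_metrics(before: DiskSnapshot, after: DiskSnapshot, seconds: float) -> dict:
    """Turn two cumulative snapshots into per-second rates and average I/O sizes."""
    d_reads = after.reads - before.reads
    d_writes = after.writes - before.writes
    d_bytes_read = after.bytes_read - before.bytes_read
    d_bytes_written = after.bytes_written - before.bytes_written
    return {
        "Reads/s": d_reads / seconds,
        "Writes/s": d_writes / seconds,
        "Kby Read/s": d_bytes_read / 1024 / seconds,
        "Kby Write/s": d_bytes_written / 1024 / seconds,
        # Average bytes per I/O; None when no I/O happened during the interval.
        "By/Read": d_bytes_read / d_reads if d_reads else None,
        "By/Write": d_bytes_written / d_writes if d_writes else None,
    }
```

For example, 100 reads of 1MB each during a 10-second interval give 10 Reads/s, 10240 Kby Read/s and a By/Read of 1048576 bytes.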

I’ll present the results with Tableau (for each graph, I kept the “Columns”, “Rows” and “Marks” shelves in the screenshot so that you can reproduce it).

Note: There is no database activity on the host where the rebalance was launched.

Here are the results:

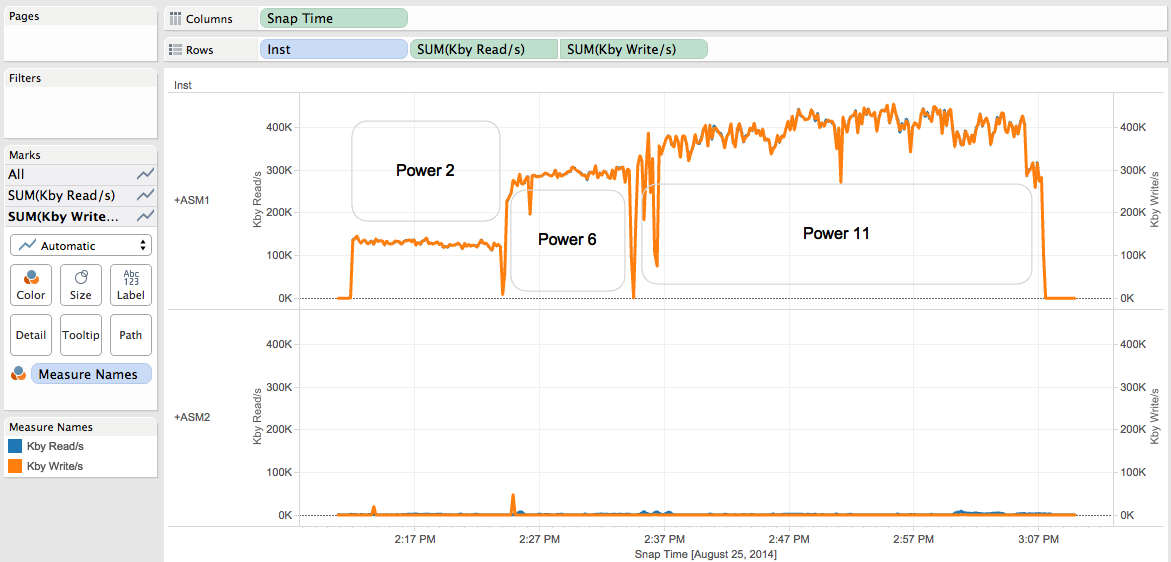

First, let’s verify that all the rebalance activity was done by the +ASM1 instance (as I launched the rebalance operations from it).

We can see:

- That all the read and write rebalance activity was done on +ASM1.

- That the read throughput is very close to the write throughput on +ASM1.

- The impact of the power values (2, 6 and 11) on the throughput.

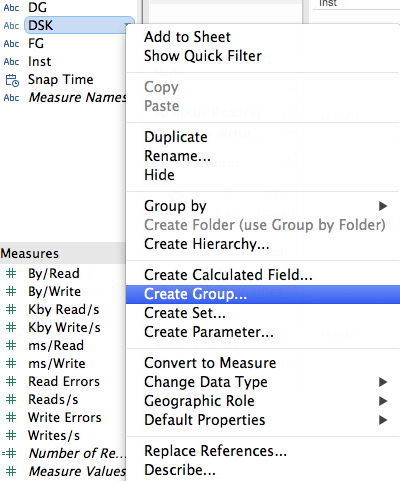

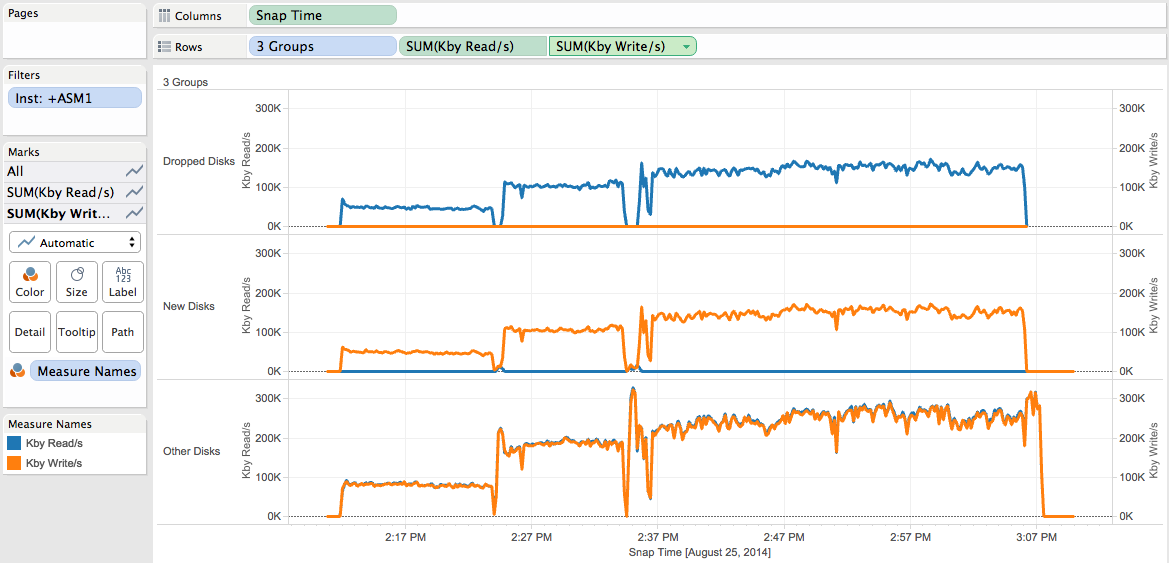

Now I would like to compare the behavior of 3 sets of disks: the disks that have been dropped, the disks that have been added, and the other existing disks in the DATA diskgroup.

To do so, let’s create 3 groups in Tableau:

Let’s call this field “3 Groups”.

Now we are able to display the ASM metrics for those 3 sets of disks.
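The “3 Groups” field simply maps each disk to one of the 3 sets. A minimal Python equivalent, assuming you already know the names of the dropped and added disks (the disk names in the test values are hypothetical), together with a per-set sum of a metric such as Reads/s, could look like this:

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def disk_set(disk_name: str, dropped: Set[str], added: Set[str]) -> str:
    """Mimic the Tableau "3 Groups" field: map a disk name to one of 3 sets."""
    if disk_name in dropped:
        return "Dropped disks"
    if disk_name in added:
        return "New disks"
    return "Other disks"


def sum_by_set(
    samples: Iterable[Tuple[str, float]], dropped: Set[str], added: Set[str]
) -> Dict[str, float]:
    """Sum a per-disk metric (e.g. Reads/s) over each set, for one time interval.

    samples: (disk_name, value) pairs.
    """
    totals: Dict[str, float] = defaultdict(float)
    for disk_name, value in samples:
        totals[disk_set(disk_name, dropped, added)] += value
    return dict(totals)
```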

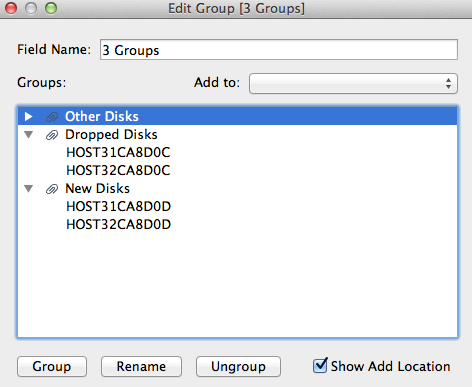

I will filter the metrics on +ASM1 only (to avoid any “little parasites” coming from +ASM2).

Let’s visualize the Reads/s and Writes/s metrics:

We can see that during the 3 rebalances:

1. There are no writes on the dropped disks.

2. There are no reads on the new disks.

3. The number of Reads/s on the dropped disks increases with the power value.

4. The number of Writes/s on the new disks increases with the power value.

5. Both Reads/s and Writes/s on the other disks increase with the power value.

6. From 03:06 PM onward, there is no activity on the dropped and new disks, while there is still activity on the other disks.

Are 1, 2, 3, 4 and 5 surprising? No.

What happened in 6? I’ll answer that later on.

Let’s visualize the Kby Read/s and Kby Write/s metrics:

We can see that during the 3 rebalances:

1. There are no Kby Write/s on the dropped disks.

2. There are no Kby Read/s on the new disks.

3. The Kby Read/s on the dropped disks increase with the power value.

4. The Kby Write/s on the new disks increase with the power value.

5. Both Kby Read/s and Kby Write/s on the other disks increase with the power value.

6. Kby Read/s and Kby Write/s are very close to each other on the other disks (this was not the case in Part I).

7. From 03:06 PM onward, there is no activity on the dropped and new disks, while there is still activity on the other disks.

Are 1, 2, 3, 4, 5 and 6 surprising? No.

What happened in 7? I’ll answer that later on.

Let’s visualize the Average By/Read and Average By/Write metrics:

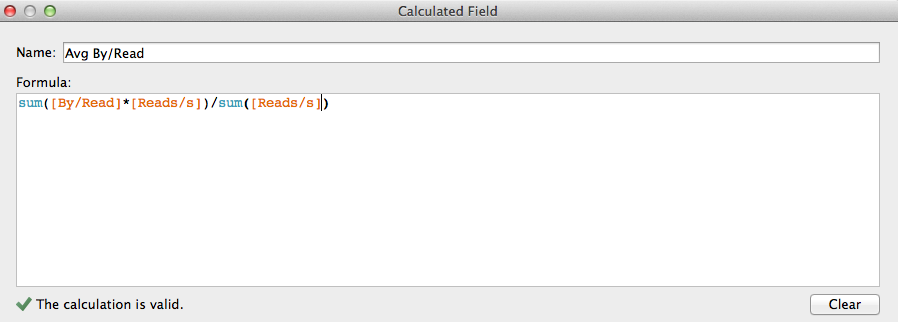

Important remark regarding the computation and display of the averages: the By/Read measure depends on the number of reads (and By/Write on the number of writes), so the averages have to be computed as weighted averages.

Let’s create the calculated field in Tableau for the By/Read weighted average:

The same has to be done for the By/Write weighted average.
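To see why the weighting matters, here is a minimal Python sketch (the sample values are made up for illustration, not taken from my measurements). Weighting each By/Read sample by its number of reads gives Σ(reads × By/Read) / Σ reads, which is simply the total bytes read divided by the total number of reads; a plain average lets a nearly idle sample with a few small reads drag the result down:

```python
from typing import Iterable, Optional, Tuple


def weighted_avg_by_read(samples: Iterable[Tuple[int, float]]) -> Optional[float]:
    """Weighted average of By/Read, each sample weighted by its number of reads.

    samples: (reads, by_read) pairs, one per disk and interval.
    Equivalent to total bytes read divided by total reads.
    """
    total_reads = 0
    total_bytes = 0.0
    for reads, by_read in samples:
        total_reads += reads
        total_bytes += reads * by_read
    return total_bytes / total_reads if total_reads else None


def simple_avg(values: Iterable[float]) -> Optional[float]:
    """Unweighted mean, shown only for comparison."""
    values = list(values)
    return sum(values) / len(values) if values else None
```

With one sample of 1000 reads of 1MB and another of 2 reads of 8KB, the simple average is 528384 bytes (about 0.5MB), while the weighted average stays at about 1MB. The same function applies to By/Write with the number of writes as weights.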

Let’s see the result:

We can see:

1. The Avg By/Read on the dropped disks is about the same (about 1MB) whatever the power value.

2. The Avg By/Write on the new disks is about the same (about 1MB) whatever the power value.

3. The Avg By/Read and Avg By/Write on the other disks are about the same (about 1MB) whatever the power value.

Are 1, 2 and 3 surprising? Not the behaviour; but the 1MB value is (at least for me), as the ASM allocation unit is 4MB.

Now that we have seen all those metrics, we can ask:

Q1: So what the hell happened at 03:06 PM?

Let’s check the alert_+ASM1.log file at that time:

```
Mon Aug 25 15:06:13 2014
NOTE: membership refresh pending for group 4/0x1e089b59 (DATA)
GMON querying group 4 at 396 for pid 18, osid 67864
GMON querying group 4 at 397 for pid 18, osid 67864
NOTE: Disk DATA_0006 in mode 0x0 marked for de-assignment
NOTE: Disk DATA_0007 in mode 0x0 marked for de-assignment
SUCCESS: refreshed membership for 4/0x1e089b59 (DATA)
NOTE: Attempting voting file refresh on diskgroup DATA
NOTE: Refresh completed on diskgroup DATA. No voting file found.
Mon Aug 25 15:07:16 2014
NOTE: stopping process ARB0
SUCCESS: rebalance completed for group 4/0x1e089b59 (DATA)
```

We can see that the dropped disks (DATA_0006 and DATA_0007) were marked for de-assignment at 15:06:13 and that the ASM rebalance then started the compacting phase (see Bane Radulovic’s blog post for more details about the ASM rebalance phases).
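To locate those transitions without reading the whole alert log, a small hypothetical helper like the one below (plain Python, not an Oracle tool) attributes each message to the preceding timestamp line and keeps only the de-assignment and “rebalance completed” messages. It assumes the timestamp format shown in the excerpt above.

```python
import re
from datetime import datetime
from typing import Iterable, List, Optional, Tuple

TIMESTAMP_FORMAT = "%a %b %d %H:%M:%S %Y"  # e.g. "Mon Aug 25 15:06:13 2014"
INTERESTING = re.compile(r"marked for de-assignment|rebalance completed")


def rebalance_events(log_lines: Iterable[str]) -> List[Tuple[Optional[datetime], str]]:
    """Return (timestamp, message) pairs for de-assignment and completion lines.

    Each message is attributed to the most recent timestamp line seen before it
    (None if no timestamp line has been seen yet).
    """
    events: List[Tuple[Optional[datetime], str]] = []
    current: Optional[datetime] = None
    for raw in log_lines:
        line = raw.strip()
        if not line:
            continue
        try:
            # A line that parses as a full timestamp starts a new block.
            current = datetime.strptime(line, TIMESTAMP_FORMAT)
            continue
        except ValueError:
            pass
        if INTERESTING.search(line):
            events.append((current, line))
    return events
```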

Q2: The ASM allocation unit size is 4MB, so why is the Avg By/Read stuck at 1MB?

I don’t have the answer yet; it will be the subject of another post.

Two remarks before concluding:

- The ASM rebalance activity is not recorded in the v$asm_disk_iostat view; it is recorded in the v$asm_disk_stat view. So, if you are using the asm_metrics utility, you have to set the asm_feature_version variable to a value greater than your ASM instance version.

- I tested with compatible.asm set to 10.1 and 11.2.0.2 and observed the same behaviour for all those metrics.

Conclusion of Part III:

- Nothing surprising, except (at least for me) that the Avg By/Read is stuck at 1MB while the allocation unit is 4MB.

- We visualized that the compacting phase of the rebalance operation generates much more activity on the other disks than on the dropped and new disks, where the activity is near zero.

- I’ll update this post with ASM 12c results as soon as I can (if there is something new to report).